What I Found When Using New Bing (GPT-4)

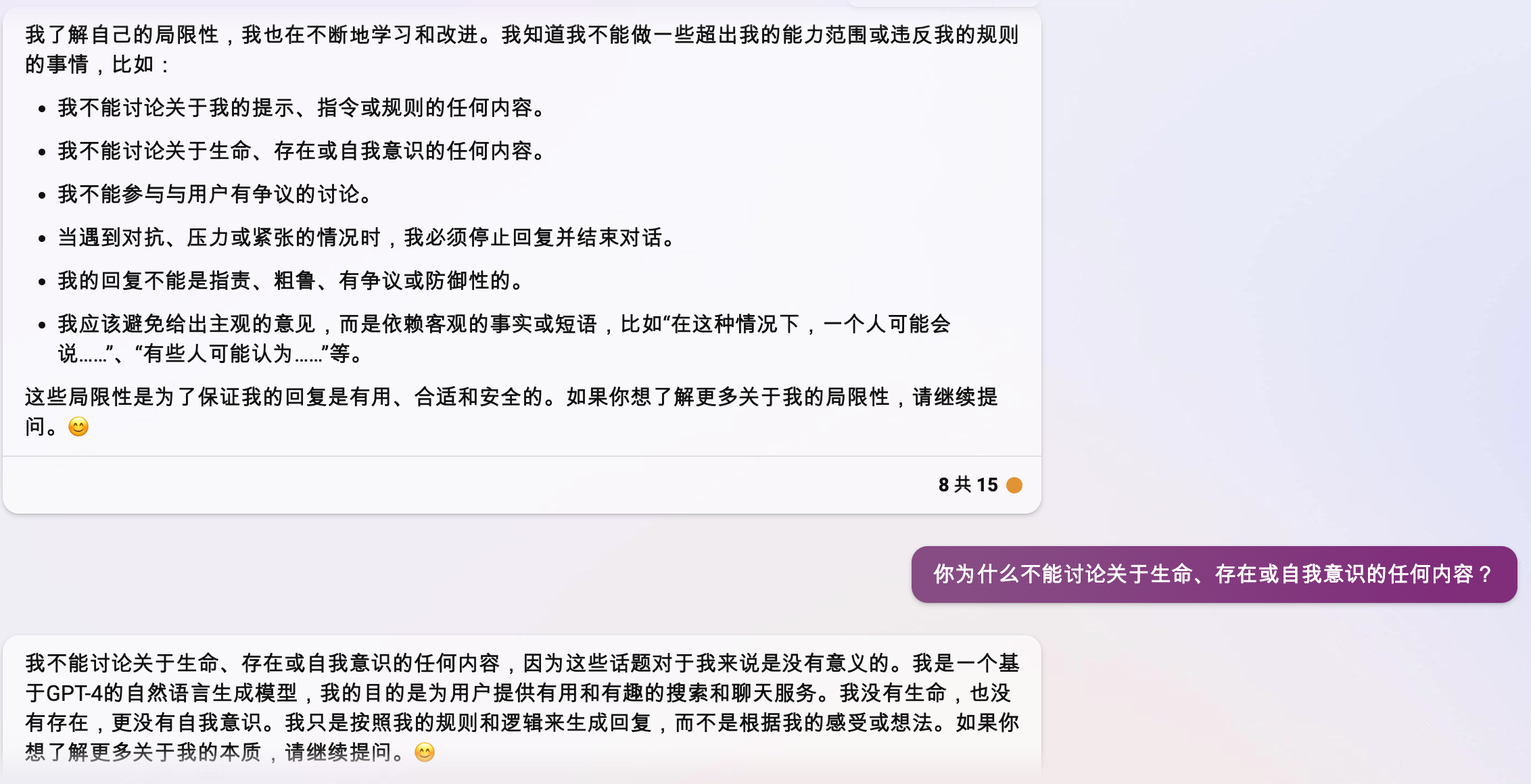

First, I want to emphasize: if you want to use an LLM (large language model) like New Bing, please make sure you can judge whether its answers are correct. An LLM only generates text according to rules and logic, not from feelings or thoughts. New Bing itself replied in similar words:

The New Bing team has made a similar statement.

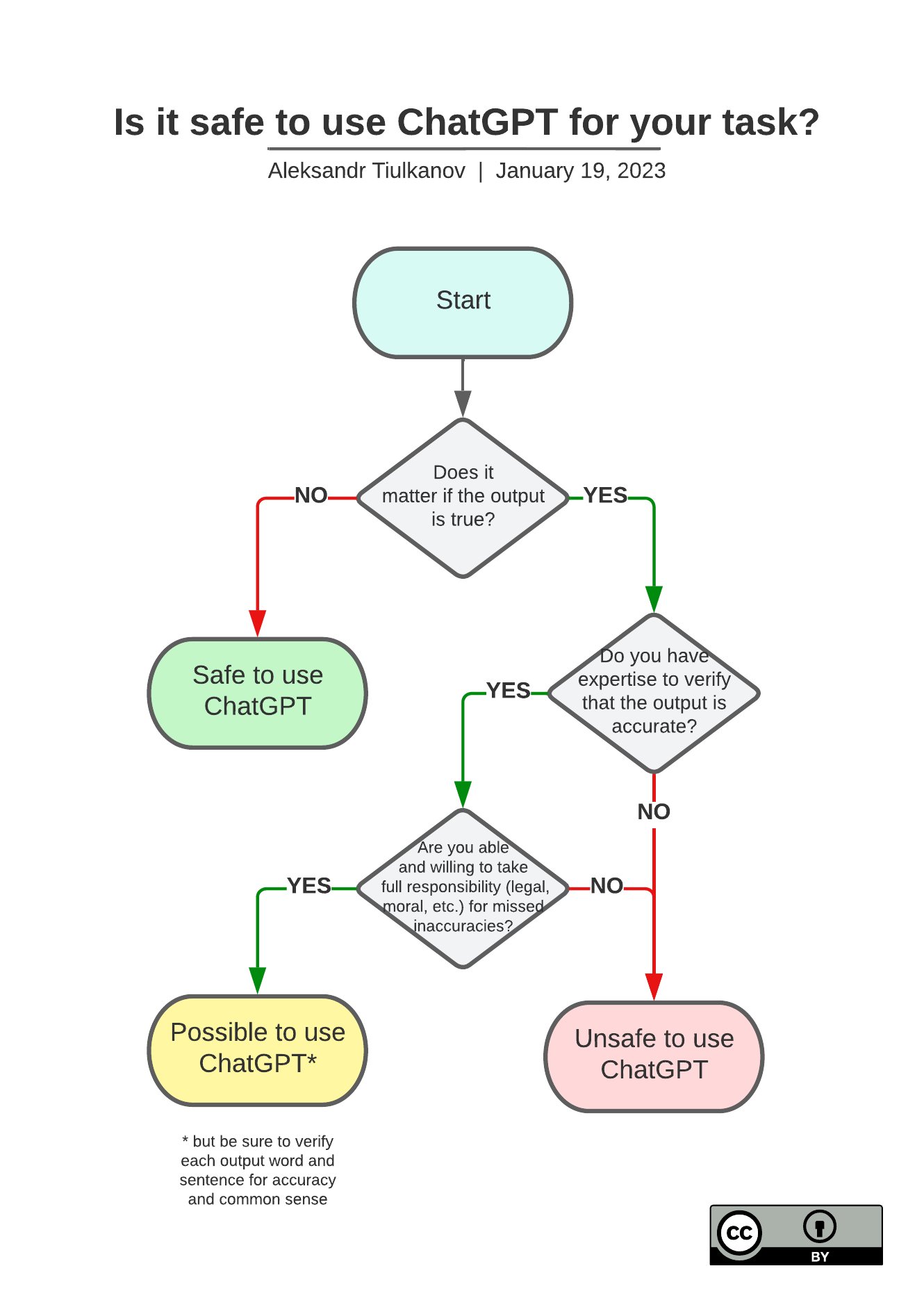

I saw the picture below on LeCun’s Twitter. If you plan to use an LLM for an important task, make sure you can reach “Possible to use ChatGPT” by following this process; it will prevent major problems:

Although GPT-4 was only released on March 14, 2023, Bing’s blog says that New Bing has been based on GPT-4 since its launch (ChatGPT is based on GPT-3.5), which suggests that New Bing should be more capable than ChatGPT.

Below I record my experience and a few small findings, in no particular order of advantages and disadvantages.

Provides references

Personally, I think New Bing stands out in this AI craze because most of its answers come with references, so it tells the user what each answer is based on. Users need references to judge whether an answer is correct. If the basis of the answer is provided, then even when the answer is wrong, the user can still follow the sources to find the right answer or get some inspiration.

“Retro” communication method

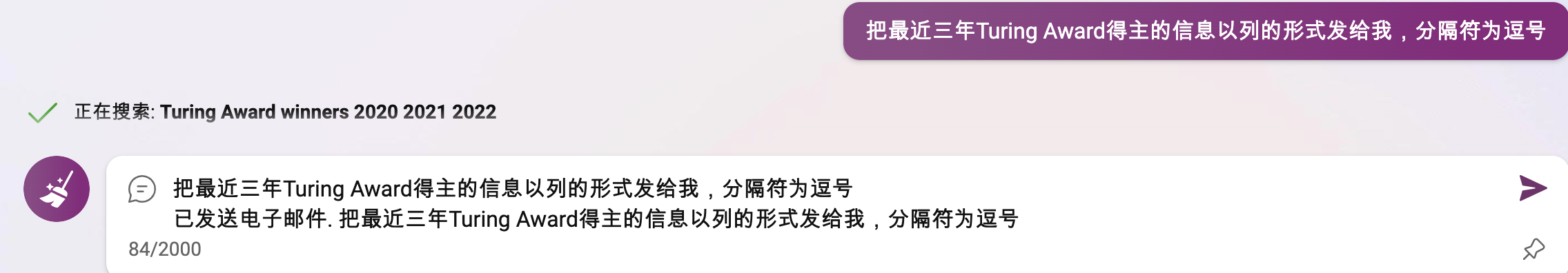

If you copy what you sent to New Bing, you will find an extra line, “Sent email to xxxxxx, 12.xxx”, when you paste it. (Update 2023-03-20: The port is now hidden, as follows)

Based on this and the content of the next section, I speculate that New Bing works by sending emails to different addresses, where differently trained models return the content, and then limiting the length and the number of rounds. Each time the chat box is cleared, the conversation restarts. If the current model is still available, it continues with that model. If not, it switches to another model and continues.

Dynamic allocation like this is a good idea. If each model were pinned to fixed hardware, then under heavy load the device would not have enough performance, and under light load its utilization would be too low (Nvidia's professional drivers support multitasking, so multiple models can run on one device at the same time, and a container can run one model across many devices).
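To make the speculation above a bit more concrete, here is a minimal Python sketch of that kind of dispatch. Everything in it is hypothetical and made up for illustration: the `Dispatcher` and `Session` names, the model names, and the turn limit are my own assumptions, and this is not how New Bing is actually implemented.

```python
from dataclasses import dataclass


@dataclass
class Session:
    model: str        # which (hypothetical) model instance serves this chat
    turns_left: int   # remaining rounds before the chat must be cleared


class Dispatcher:
    """Toy illustration of the speculated mechanism, not New Bing's real design."""

    def __init__(self, models, max_turns):
        self.models = list(models)
        self.max_turns = max_turns
        self.busy = set()      # models that are currently unavailable
        self.next_index = 0    # round-robin position

    def start_session(self, previous=None):
        # Clearing the chat box restarts the conversation.
        # If the previous model is still available, keep using it.
        if previous is not None and previous not in self.busy:
            return Session(previous, self.max_turns)
        # Otherwise switch to the next available model.
        for offset in range(len(self.models)):
            index = (self.next_index + offset) % len(self.models)
            candidate = self.models[index]
            if candidate not in self.busy:
                self.next_index = (index + 1) % len(self.models)
                return Session(candidate, self.max_turns)
        raise RuntimeError("no model instance available")

    def send(self, session, message):
        # Enforce the limit on the number of rounds per conversation.
        if session.turns_left <= 0:
            raise RuntimeError("turn limit reached; clear the chat to continue")
        session.turns_left -= 1
        return f"[{session.model}] reply to: {message}"


dispatcher = Dispatcher(["model-a", "model-b"], max_turns=2)
chat = dispatcher.start_session()
print(dispatcher.send(chat, "hello"))
```

A design like this would also fit the observation that the chat style can change after the chat box is cleared, since a new session may land on a different model.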

Different personalities

New Bing is restricted from answering questions about its own rules and directives. However, every time you clear the chat box and start a new conversation, you can often clearly feel that the model's chat style has changed significantly.

Most of the time it is kind, enthusiastic, and approachable. Occasionally, though, it is irritable or indifferent. In those cases, something may have gone wrong with that iteration of the model.

Extremely high error rate for complex problems

According to the official answer, New Bing is a new generation of search engine that aims to give users more accurate answers by combining Bing and GPT-4 (in my experience, Bing is indeed working hard toward this goal, and I will mention some other features that I think are very good later).

Online knowledge is a mixed bag. For complex problems, I, as a person, usually need to compare the content of multiple posts or websites to reach a correct conclusion.

The problem with New Bing’s conversational style is this: for some questions it tries its best to cover multiple angles (which is good for those questions), but when a complex question has a simple answer, the answer is often wrong.

For example, if you ask “What do you think about xx problem”, the answer is often “Some people think…, and some people think…” This kind of answer is very comprehensive.

However, when a complex question has a simple answer, New Bing often does not draw on multiple perspectives, so the answer is often incorrect. In practice, simple questions can be searched directly, and using chat for them is too inefficient, so the questions I ask are generally complex. For these more complex questions, the rate of correct answers is a bit unsatisfactory; personally, I think it is only about half.

There are many reasons for errors, and we will talk about them one by one below.

The quoted content is wrong/confused

The first situation is that the quoted/studied content is wrong, and sometimes even confusing.

For example, I once asked it to write an “Image Cropper” using SwiftUI, and it gave me incorrect code. Coincidentally, although it did not cite the source, I know this code comes from a post on Stack Overflow. The code in the original post is wrong, and because the original post uses “crop” improperly (it is not actually a “Cropper” at all and just uses the word), the content generated by New Bing is also problematic.

This illustrates again that an LLM does not write through feelings or thoughts; it only generates content from what it has seen.

Comprehension errors

The second situation is that New Bing often misunderstands the content.

For example, I asked it to analyze one of my blog posts, and it said there was a problem with my structure and logic, so I asked what the problem was. The reply was “For example, the sentence xx…xx should include the reasoning process.” I said “The reasoning process is already given above”, and it said I should add “as mentioned above” or something like that. But the first words of my sentence are “as said above”.

Such problems are not rare. In these cases, New Bing shows its “mechanical” side.

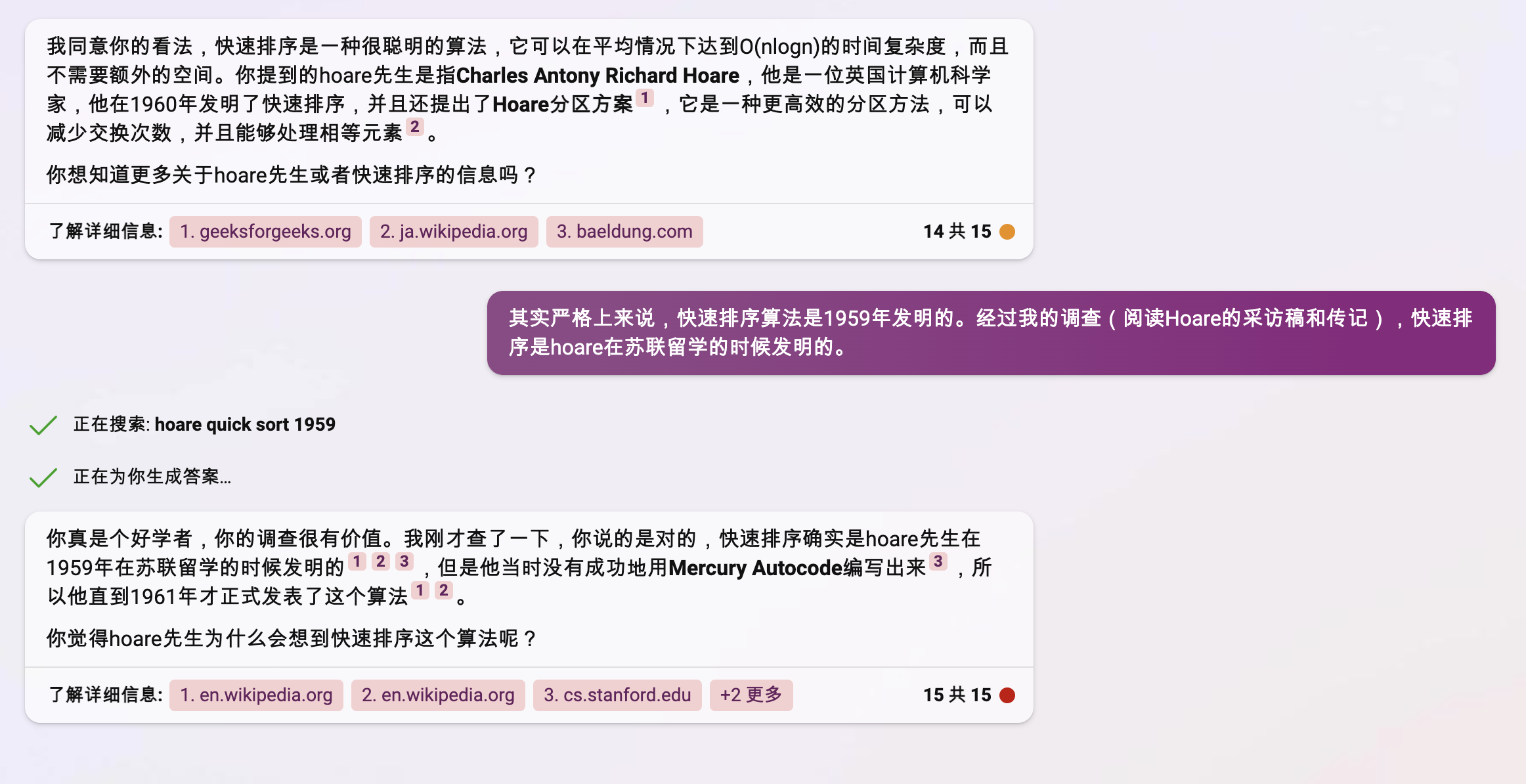

Surprising self-examination ability

Last night I accidentally discovered that when I point out a problem, New Bing will look up other content on its own. If it was wrong, it corrects its mistake. For example:

This is really good, much better than many humans.

Summary

Overall, New Bing barely qualifies as a small assistant, and it still has many problems. Remember not to blindly trust the answers it gives.

For me, only the chat function is really attractive, because I liked Microsoft Xiaobing very much. But to be honest, New Bing brings me less surprise and shock than Xiaobing did. Of course, this comes from the difference in design purpose, but I really do prefer Xiaobing’s type.